HDFS

HDFS is structured similarly to a regular Unix filesystem, except that data storage is distributed across several machines. It is not intended as a replacement for a regular filesystem, but rather as a filesystem-like layer for large distributed systems to use. It has built-in mechanisms to handle machine outages, and is optimized for throughput rather than latency.

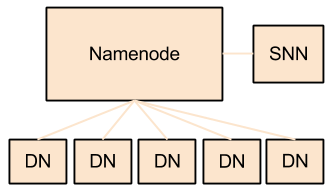

There are two and a half types of machine in an HDFS cluster:

- Datanode - where HDFS actually stores the data. There are usually quite a few of these.

- Namenode - the ‘master’ machine. It controls all the metadata for the cluster, e.g. which blocks make up a file and which datanodes those blocks are stored on.

- Secondary Namenode - this is NOT a backup namenode, but a separate service that keeps a copy of both the edit log and the filesystem image, merging them periodically to keep the edit log a reasonable size.

  - The Secondary Namenode is being deprecated in favor of the backup node and the checkpoint node, but the functionality remains similar (if not the same).
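To make the "merging them periodically" part concrete, here is a minimal sketch of what a checkpoint does: replay the edit log on top of the last filesystem image, then start over with an empty log. The operation names (`create`, `add_block`, `delete`), the block IDs, and the dictionary layout are all invented for illustration; HDFS's real formats are different.

```python
# Toy model of a checkpoint. The namespace image maps a path to the list of
# block ids that make up the file. Operation names are hypothetical.

def apply_edit(image, edit):
    """Apply one logged operation to a namespace image (path -> block ids)."""
    op, path, *args = edit
    if op == "create":
        image[path] = []
    elif op == "add_block":
        image[path].append(args[0])
    elif op == "delete":
        image.pop(path, None)
    else:
        raise ValueError(f"unknown operation: {op}")


def checkpoint(fsimage, edit_log):
    """Merge the edit log into a copy of the image; return (new_image, empty_log)."""
    merged = {path: list(blocks) for path, blocks in fsimage.items()}
    for edit in edit_log:
        apply_edit(merged, edit)
    return merged, []


fsimage = {"/logs/day1": ["blk_1", "blk_2"]}
edit_log = [
    ("create", "/logs/day2"),
    ("add_block", "/logs/day2", "blk_3"),
    ("delete", "/logs/day1"),
]

new_image, new_log = checkpoint(fsimage, edit_log)
print(new_image)  # {'/logs/day2': ['blk_3']}
print(new_log)    # []
```

Without the merge, the log would grow with every change and replaying it at startup would take longer and longer, which is why the size needs to be kept in check.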

Data can be accessed using either the Java API or the Hadoop command-line client.

Note that HDFS is optimized differently from a regular filesystem. It is designed for non-realtime applications demanding high throughput instead of online applications demanding low latency. For example, files cannot be modified in place once written, and the latency of reads and writes is poor by filesystem standards. On the flip side, throughput scales fairly linearly with the number of datanodes in a cluster, so it can handle workloads no single machine would ever be able to.
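A rough back-of-envelope model shows why this trade-off works out. It assumes many readers pull blocks from all datanodes in parallel, that each datanode sustains 100 MB/s, and that every read pays a fixed one-second startup cost. All three numbers are assumptions picked for illustration, not measurements.

```python
# Back-of-envelope model: time to read a dataset when blocks are streamed
# from every datanode in parallel. The default rates are illustrative only.

def read_time_seconds(size_mb, datanodes, mb_per_sec_per_node=100.0, startup_sec=1.0):
    """Fixed startup latency plus transfer time at the cluster's aggregate rate."""
    aggregate_rate = mb_per_sec_per_node * datanodes
    return startup_sec + size_mb / aggregate_rate


one_tb_in_mb = 1_000_000
for n in (1, 10, 100):
    print(n, round(read_time_seconds(one_tb_in_mb, n)))
# 1 10001
# 10 1001
# 100 101
```

In this model the large read gets roughly ten times faster for every tenfold increase in datanodes, while a 1 MB read is dominated by the startup cost no matter how many machines you add.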

HDFS also has a bunch of unique features that make it ideal for distributed systems:

- Failure tolerant - data can be duplicated across multiple datanodes to protect against machine failures. The usual setting, and the HDFS default, is a replication factor of 3 (each block is stored on three datanodes).

- Scalability - data transfers happen directly with the datanodes, so your read/write capacity scales fairly well with the number of datanodes.

- Space - need more disk space? Just add more datanodes and rebalance.

- Industry standard - lots of other distributed applications build on top of HDFS (HBase, MapReduce).

- Pairs well with MapReduce - as we shall learn.
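The failure-tolerance point can be sketched with a toy placement model: split a file into blocks, store each block on three distinct datanodes, then check whether every block is still readable after some machines die. The 128 MB block size, the datanode names, and the round-robin placement are assumptions for this sketch; real HDFS uses a rack-aware placement policy.

```python
BLOCK_SIZE_MB = 128  # assumed block size for this sketch
REPLICATION = 3      # replication factor of 3


def place_blocks(file_size_mb, datanodes, replication=REPLICATION):
    """Split a file into blocks and put each block on `replication` distinct datanodes.

    Uses simple round-robin placement, not HDFS's rack-aware policy.
    """
    if replication > len(datanodes):
        raise ValueError("need at least as many datanodes as replicas")
    num_blocks = -(-file_size_mb // BLOCK_SIZE_MB)  # ceiling division
    return {
        block: [datanodes[(block + i) % len(datanodes)] for i in range(replication)]
        for block in range(num_blocks)
    }


def readable_after_failure(placement, failed):
    """True if every block still has at least one replica on a live datanode."""
    return all(
        any(node not in failed for node in replicas)
        for replicas in placement.values()
    )


datanodes = ["dn1", "dn2", "dn3", "dn4", "dn5"]  # hypothetical machine names
placement = place_blocks(500, datanodes)         # 500 MB file -> 4 blocks

print(readable_after_failure(placement, {"dn1", "dn2"}))         # True
print(readable_after_failure(placement, {"dn1", "dn2", "dn3"}))  # False
```

With three replicas per block, any two machines can fail without losing data. Losing three can wipe out a block if they happen to be exactly the three that held it.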
